Question 1

In the circuit shown in the diagram, find the value of R required for the galvanometer to measure voltages up to 40 V.

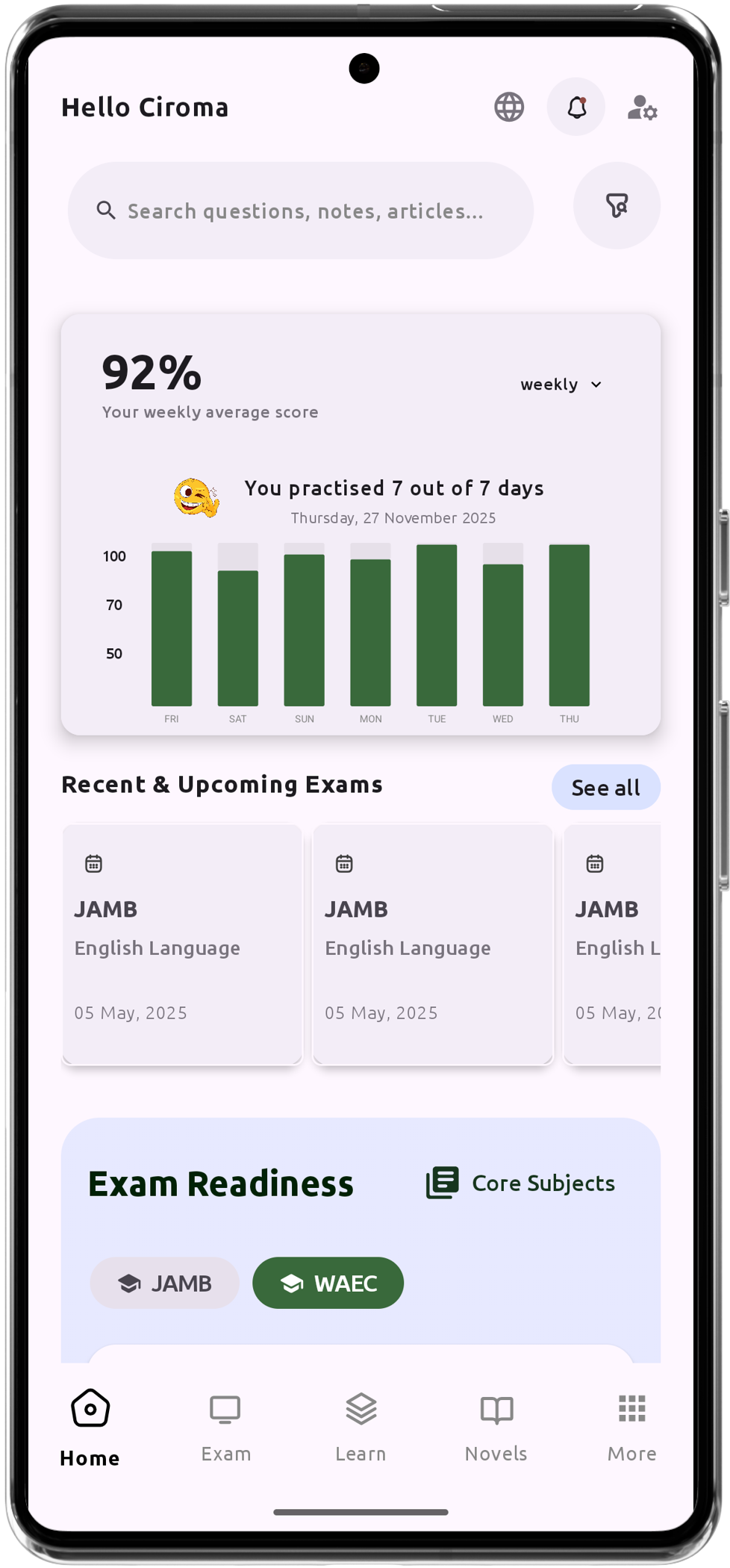
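The diagram's component values are not reproduced in this text. As a sketch, assume the diagram shows a galvanometer with internal resistance r_g and full-scale deflection current I_g, with R connected in series as a multiplier (the usual way to convert a galvanometer into a voltmeter). At full scale, the 40 V across the combination drives exactly I_g through both resistances:

```latex
V = I_g\,(r_g + R)
\quad\Longrightarrow\quad
R = \frac{V}{I_g} - r_g = \frac{40\ \text{V}}{I_g} - r_g
```

Substituting the values of I_g and r_g given in the diagram gives the required R.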
